Continuing my work in regress, this post revisits—with the benefit of much hindsight—what I was working on for my DPhil thesis (which was summarized in a paper at MPC 1992) and in subsequent papers at MPC 1998 and in SCP in 2000. This is the topic of accumulations on data structures, which distribute information across the data structure. List instances are familiar from the Haskell standard libraries (and, to those with a long memory, from APL); my thesis presented instances for a variety of tree datatypes; and the later work was about making it datatype-generic. I now have a much better way of doing it, using Conor McBride’s derivatives.

Accumulations

Accumulations or scans distribute information contained in a data structure across that data structure in a given direction. The paradigmatic example is computing the running totals of a list of numbers, which can be thought of as distributing the numbers rightwards across the list, summing them as you go. In Haskell, this is an instance of the operator:

A special case of this pattern is to distribute the elements of a list rightwards across the list, simply collecting them as you go, rather than summing them. That’s the function, and it too is an instance of

:

It’s particularly special, in the sense that it is the most basic ; any other instance can be expressed in terms of it:

This is called the Scan Lemma for . Roughly speaking, it states that a

replaces every node of a list with a

applied to that node’s predecessors. Read from right to left, the scan lemma is an efficiency-improving transformation, eliminating duplicate computations; but note that this only works on expressions

where

is a

, because only then are there duplicate computations to eliminate. It’s an important result, because it relates a clear and simple specification on the right to a more efficient implementation on the left.

However, the left-to-right operators ,

, and

are a little awkward in Haskell, because they go against the grain of the cons-based (ie, right-to-left) structure of lists. I leave as a simple exercise for the reader the task of writing the more natural

,

, and

, and identifying the relationships between them. Conversely, one can view

etc as the natural operators for snoc-based lists, which are constructed from nil and snoc rather than from nil and cons.

Upwards and downwards accumulations on binary trees

What would ,

,

, etc look like on different—and in particular, non-linear—datatypes? Let’s consider a simple instance, for homogeneous binary trees; that is, trees with a label at both internal and external nodes.

for which the obvious fold operator is

I’m taking the view that the appropriate generalization is to distribute data “upwards” and “downwards” through such a tree—from the leaves towards the root, and vice versa. This does indeed specialize to the definitions we had on lists when you view them vertically in terms of their “cons” structure: they’re long thin trees, in which every parent has exactly one child. (An alternative view would be to look at distributing data horizontally through a tree, from left to right and vice versa. Perhaps I’ll come back to that another time.)

The upwards direction is the easier one to deal with. An upwards accumulation labels every node of the tree with some function of its descendants; moreover, the descendants of a node themselves form a tree, so can be easily represented, and folded. So we can quite straightforwardly define:

where yields the root of a tree:

As with lists, the most basic upwards scan uses the constructors themselves as arguments:

and any other scan can be expressed, albeit less efficiently, in terms of this:

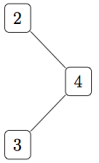

The downwards direction is more difficult, though. A downwards accumulation should label every node with some function of its ancestors; but these do not form another tree. For example, in the homogeneous binary tree

the ancestors of the node labelled are the nodes labelled

. One could represent those ancestors simply as a list,

; but that rules out the possibility of a downwards accumulation treating left children differently from right children, which is essential in a number of algorithms (such as the parallel prefix and tree drawing algorithms in my thesis). A more faithful rendering is to define a new datatype of paths that captures the left and right turns—a kind of non-empty cons list, but with both a “left cons” and a “right cons” constructor:

(I called them “threads” in my thesis.) Then we can capture the data structure representing the ancestors of the node labelled

by the expression . I leave it as an exercise for the more energetic reader to work out a definition for

to compute the tree giving the ancestors of every node, and for a corresponding .

Generic upwards accumulations

Having seen ad-hoc constructions for a particular kind of binary tree, we should consider what the datatype-generic construction looks like. I discussed datatype-generic upwards accumulations already, in the post on Horner’s Rule; the construction was given in the paper Generic functional programming with types and relations by Richard Bird, Oege de Moor and Paul Hoogendijk. As with homogeneous binary trees, it’s still the case that the generic version of labels every node of a data structure of type

with the descendants of that node, and still the case that the descendants form a data structure also of type

. However, in general, the datatype

does not allow for a label at every node, so we need the labelled variant

where

. Then we can define

where returns the root label of a labelled data structure—by construction, every labelled data structure has a root label—and

is the unique arrow to the unit type. Moreover, we get a datatype-generic

operator, and a Scan Lemma:

Generic downwards accumulations, via linearization

The best part of a decade after my thesis work, inspired by the paper by Richard Bird & co, I set out to try to define datatype-generic versions of downward accumulations too. I wrote a paper about it for MPC 1998, and then came up with a new construction for the journal version of that paper in SCP in 2000. I now think these constructions are rather clunky, and I have a better one; if you don’t care to explore the culs-de-sac, skip this section and the next and go straight to the section on derivatives.

The MPC construction was based around a datatype-generic version of the datatype above, to represent the “ancestors” of a node in an inductive datatype. The tricky bit is that data structures in general are non-linear—a node may have many children—whereas paths are linear—every node has exactly one child, except the last which has none; how can we define a “linear version”

of

? Technically, we might say that a functor is linear (actually, “affine” would be a better word) if it distributes over sum.

The construction in the paper assumed that was a sum of products of literals

where each is either

,

, or some constant type such as

or

. For example, for leaf-labelled binary trees

the shape functor is , so

(there are two variants),

(the first variant has a single literal,

) and

(the second variant has two literals,

and

), and:

Then for each we define a

-ary functor

, where

is the “degree of branching” of variant

(ie, the number of

s occurring in

, which is the number of

for which

), in such a way that

and is linear in each argument except perhaps the first. It’s a bit messy explicitly to give a construction for

, but roughly speaking,

where is “the next unused

” when

, and just

otherwise. For example, for leaf-labelled binary trees, we have:

Having defined the linear variant of

, we can construct the datatype

of paths, as the inductive datatype of shape

where

That is, paths are a kind of non-empty cons list. The path ends at some node of the original data structure; so the last element of the path is of type , which records the “local content” of a node (its shape and labels, but without any of its children). Every other element of the path consists of the local content of a node together with an indication of which direction to go next; this amounts to the choice of a variant

, followed by the choice of one of

identical copies of the local contents

of variant

, where

is the degree of branching of variant

. We model this as a base constructor

and a family of “cons” constructors

for

and

.

For example, for leaf-labelled binary trees, the “local content” for the last element of the path is either a single label (for tips) or void (for bins), and for the other path elements, there are zero copies of the local content for a tip (because a tip has zero children), and two copies of the void local information for bins (because a bin has two children). Therefore, the path datatype for such trees is

which is isomorphic to the definition that you might have written yourself:

For homogeneous binary trees, the construction gives

which is almost the ad-hoc definition we had two sections ago, except that it distinguishes singleton paths that terminate at an external node from those that terminate at an internal one.

Now, analogous to the function which labels every node with its descendants, we can define a function

to label every node with its ancestors, in the form of the path to that node. One definition is as a fold; informally, at each stage we construct a singleton path to the root, and map the appropriate “cons” over the paths to each node in each of the children (see the paper for a concrete definition). This is inefficient, because of the repeated maps; it’s analogous to defining

by

A second definition is as an unfold, maintaining as an accumulating parameter of type the “path so far”; this avoids the maps, but it is still quadratic because there are no common subexpressions among the various paths. (This is analogous to an accumulating-parameter definition of

:

Even with an accumulating “Hughes list” parameter, it still takes quadratic time.)

The downwards accumulation itself is defined as a path fold mapped over the paths, giving a Scan Lemma for downwards accumulations. With either the fold or the unfold definition of paths, this is still quadratic, again because of the lack of common subexpressions in a result of quadratic size. However, in some circumstances the path fold can be reassociated (analogous to turning a into a

), leading finally to a linear-time computation; see the paper for the details of how.

Generic downwards accumulations, via zip

I was dissatisfied with the “…”s in the MPC construction of datatype-generic paths, but couldn’t see a good way of avoiding them. So in the subsequent SCP version of the paper, I presented an alternative construction of downwards accumulations, which does not go via a definition of paths; instead, it goes directly to the accumulation itself.

As with the efficient version of the MPC construction, it is coinductive, and uses an accumulating parameter to carry in to each node the seed from higher up in the tree; so the downwards accumulation is of type . It is defined as an unfold, with a body

of type

The result of applying the body will be constructed from two components, of types

and

: the first gives the root label of the accumulation and the seeds for processing the children, and the second gives the children themselves.

These two components get combined to make the whole result via a function

This will be partial in general, defined only for pairs of -structures of the same shape.

The second component of is the easier to define; given input

, it unpacks the

to

, and discards the

and the

(recall that

is the labelled variant of

, where

).

For the first component, we enforce the constraint that all output labels are dependent only on their ancestors by unpacking the and pruning off the children, giving input

. We then suppose as a parameter to the accumulation a function

of type

to complete the construction of the first component. In order that the two components can be zipped together, we require that

is shape-preserving in its second argument:

where is the unique function to the unit type. Then, although the

built from these two components depends on the partial function

, it will still itself be total.

The SCP construction gets rid of the “…”s in the MPC construction. It is also inherently efficient, in the sense that if the core operation takes constant time then the whole accumulation takes linear time. However, use of the partial

function to define a total accumulation is a bit unsatisfactory, taking us outside the domain of sets and total functions. Moreover, there’s now only half an explanation in terms of paths: accumulations in which the label attached to each node depends only on the list of its ancestors, and not on the left-to-right ordering of siblings, can be factored into a list function (in fact, a

) mapped over the “paths”, which is now a tree of lists; but accumulations in which left children are treated differently from right children, such as the parallel prefix and tree drawing algorithms mentioned earlier, can not.

Generic downwards accumulations, via derivatives

After another interlude of about a decade, and with the benefit of new results to exploit, I had a “eureka” moment: the linearization of a shape functor is closely related to the beautiful notion of the derivative of a datatype, as promoted by Conor McBride. The crucial observation Conor made is that the “one-hole contexts” of a datatype—that is, for a container datatype, the datatype of data structures with precisely one element missing—can be neatly formalized using an analogue of the rules of differential calculus. The one-hole contexts are precisely what you need to identify which particular child you’re talking about out of a collection of children. (If you’re going to follow along with some coding, I recommend that you also read Conor’s paper Clowns to the left of me, jokers to the right. This gives the more general construction of dissecting a datatype, identifying a unique hole, but also allowing the “clowns” to the left of the hole to have a different type from the “jokers” to the right. I think the explanation of the relationship with the differential calculus is much better explained here; the original notion of derivative can be retrieved by specializing the clowns and jokers to the same type.)

The essence of the construction is the notion of a derivative of a functor

. For our purposes, we want the derivative in the second argument only of a bifunctor; informally,

is like

, but with precisely one

missing. Given such a one-hole context, and an element with which to plug the hole, one can reconstruct the whole structure:

That’s how to consume one-hole contexts; how can we produce them? We could envisage some kind of inverse of

, which breaks an

-structure into an element and a context; but this requires us to invent a language for specifying which particular element we mean—

is not injective, so

needs an extra argument. A simpler approach is to provide an operator that annotates every position at once with the one-hole context for that position:

One property of is that it really is an annotation—if you throw away the annotations, you get back what you started with:

A second property relates it to —each of elements in a hole position plugs into its associated one-hole context to yield the same whole structure back again:

(I believe that those two properties completely determine and

.)

Incidentally, the derivative of a bifunctor can be elegantly represented as an associated type synonym in Haskell, in a type class

of bifunctors differentiable in their second argument, along with

and

:

Conor’s papers show how to define instances of for all polynomial functors

—anything made out of constants, projections, sums, and products.

The path to a node in a data structure is simply a list of one-hole contexts—let’s say, innermost context first, although it doesn’t make much difference—but with all the data off the path (that is, the other children) stripped away:

This is a projection of Huet’s zipper, which preserves the off-path children, and records also the subtree in focus at the end of the path:

Since the contexts are listed innermost-first in the path, closing up a zipper to reconstruct a tree is a over the path:

Now, let’s develop the function , which turns a tree into a labelled tree of paths. We will write it with an accumulating parameter, representing the “path so far”:

Given the components of a tree and a path

to its root,

must construct the corresponding labelled tree of paths. Since

and

, this amounts to constructing a value of type

. For the first component of this pair we will use

, the path so far. The second component can be constructed from

by identifying all children via

, discarding some information with judicious

s, consing each one-hole context onto

to make a longer path, then making recursive calls on each child:

That is,

Downwards accumulations are then path functions mapped over the result of . However, we restrict ourselves to path functions that are instances of

, because only then are there common subexpressions to be shared between a parent and its children (remember that paths are innermost-first, so related nodes share a tail of their ancestors).

Moreover, it is straightforward to fuse the with

, to obtain

which takes time linear in the size of the tree, assuming that and

take constant time.

Finally, in the case that the function being mapped over the paths is a as well as a

, then we can apply the Third Homomorphism Theorem to conclude that it is also an associative fold over lists. From this (I believe) we get a very efficient parallel algorithm for computing the accumulation, taking time logarithmic in the size of the tree—even if the tree has greater than logarithmic depth.

Isn’t the type of scanl in the first paragraph of code incorrect? That is, the last type should be [alpha] rather than just alpha.

Oops. Fixed, thanks. (And I’ve swapped the alpha and betas for consistency with what follows.)